Artificial Intelligence (AI) and Machine Learning (ML) have moved from niche technologies to tools that are reshaping entire industries. Businesses increasingly rely on AI/ML to improve their operations, as is evident from the global investment (US$ 94 billion in 2021) that has gone into these technologies. However, rising business dependency on AI/ML means the technology must deliver consistently. That is easier said than done, as there is still plenty of room for improvement.

AI/ML development companies are under immense pressure to enhance the functional efficiency of their automated solutions. The key to improving AI/ML models lies in training them with high-quality data, and that data is produced through data annotation and data labelling.

Read on to learn what data annotation is, how it is performed, how labelling and annotation differ, what benefits they can bring to a business, and why so many enterprises opt for data outsourcing services.

Data Annotation and Labelling: What Are They?

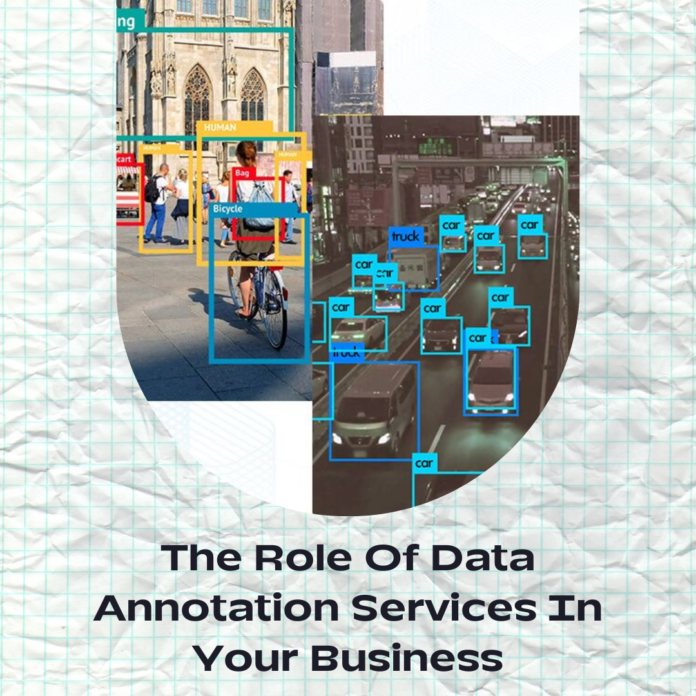

Data annotation is the process in which a person, usually a trained annotator or AI professional, tags various types of data so that an AI/ML model can recognize them and use them for generating predictions, making decisions, and so on. The primary goal of data annotation is to identify the data relevant to a model, which makes it a prerequisite for training ML models.

Data labelling is similar to data annotation in that it involves tagging the target data, but it also adds metadata about the target subject. Its goal is to expose patterns in the available data, such as relevant features, so that the required algorithm can be trained on them.

In practice, however, there is hardly any difference between the two processes, so much so that the terms are used interchangeably. The slight differences lie in the method applied and the type of content tagging used. When training ML models, the two can be used together to create more accurate training data sets.

The Necessity of Data Annotation and Labelling

Humans develop a skill by practising it regularly and adjusting at each iteration to improve. AI/ML algorithms work similarly, as they are designed to mimic aspects of human learning. This is unlike conventional software, which is still the norm, where every task has to be explicitly programmed. AI/ML represents a fundamental shift in technology, but these systems are still machines: they don't possess the intuition or awareness needed to instinctively pick up on and understand their surroundings.

Devices like cameras, microphones, and other sensors function as their senses and provide the input data for a machine to process. But the machine won't be able to make sense of that data unless it is trained to recognize it for what it is. Here's where annotation and labelling come into the picture. Annotators use a set of techniques to mark the target subject in a data set so that the algorithm can distinguish it from everything else. A piece of data marked in this way is known as a training sample.

The algorithm is then fed many such samples to help it become familiar with the target subject. It gradually gains the ability to predict and distinguish the subject, after which the process is automated so that the algorithm can learn from thousands or even millions of examples.

Very large datasets are used for this process, some proprietary and others openly available. COCO (Common Objects in Context), a dataset introduced by Microsoft, is one openly available example that image annotation professionals can draw on: it contains around 2.5 million labelled object instances across roughly 328,000 images, covering 91 easily recognizable object types.

Labelling helps the algorithms establish patterns between the different data samples they use to train so that they get better at contextualising whatever subject or theme of samples they are working with.

Annotation and labelling are also used at the other end of the process, to produce samples for testing the algorithm's detection accuracy. The output of the algorithm is compared with the annotated/labelled sample, and the process is repeated until the desired level of accuracy is reached. While this testing is done manually at first, it is automated once detection accuracy becomes sufficiently high. Repeated learning and testing over time make the algorithm more accurate and reduce the time it takes to detect the target subject.
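The comparison step described above can be sketched in a few lines of Python. This is only an illustration: the labels, the model outputs, and the 0.9 target threshold are made-up assumptions, not values from any particular project.

```python
def accuracy(predictions, gold_labels):
    """Fraction of predictions that match the human-annotated labels."""
    if len(predictions) != len(gold_labels):
        raise ValueError("predictions and gold_labels must have the same length")
    if not gold_labels:
        raise ValueError("need at least one labelled sample")
    matches = sum(p == g for p, g in zip(predictions, gold_labels))
    return matches / len(gold_labels)


# Hypothetical annotated test samples and the model's output for each one.
gold = ["cat", "dog", "dog", "cat", "bird"]
predicted = ["cat", "dog", "cat", "cat", "bird"]

TARGET_ACCURACY = 0.9  # assumed threshold; a real project would set its own

score = accuracy(predicted, gold)
needs_more_training = score < TARGET_ACCURACY
print(f"accuracy={score:.2f}, keep training: {needs_more_training}")
```

In this toy run, four of the five predictions match the annotations, so the score stays below the assumed target and another round of training would follow.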

Therefore, it is fair to say that data annotation and labelling are at the core of AI/ML development, since they make it possible for the machine to analyse data and extract valuable information from it. They provide the high-quality data necessary to create an accurate AI.

Deep Learning, Neural Networks, and Computer Vision

Data annotation and labelling are better understood in the context of the underlying technology they serve. As mentioned earlier, this form of computing differs from conventional programming: instead of following explicitly coded rules, the model learns its behaviour from data. The workload is distinctive enough that it has led to a dedicated class of processors, the Neural Processing Unit (NPU).

The models themselves are called neural networks because they are built from many simple units connected in a network, loosely resembling how brain cells (neurons) are connected. An NPU is designed to run the calculations of such networks efficiently, much as a regular Central Processing Unit (CPU) handles a computer's general-purpose work.

It is the neural network that enables these machines to learn. The NPU works in conjunction with the CPU to accomplish its tasks, and Graphics Processing Units (GPUs) are also widely used, particularly for visual data recognition.

Deep Learning

Data annotation and labelling aren’t confined to the recognition of simple patterns, because AI/ML is also used for sophisticated tasks like predictive analysis and natural language processing (NLP). ML/AI algorithms can perform these tasks only when trained on data of matching complexity.

Deep learning is applied to accomplish this type of learning. It is a form of machine learning that automates predictive analysis and improves a model’s recognition capabilities by stacking layers of processing into a hierarchy. Each layer in this hierarchy builds on the previous one, representing the data at increasing levels of complexity and abstraction; the term deep refers to the number of stacked layers. This is in contrast to conventional algorithms, which take a linear approach that keeps the complexity constant throughout.

Deep learning also supports the automation of machine learning: once a model is accurate enough, it can be used to pre-annotate and label new data, and that output can in turn be used to train it further. Manual annotation/labelling remains necessary to feed the initial stage of this process and to produce the test samples needed for verification and validation.

Computer Vision

This aspect of AI/ML pertains to the comprehension and analysis of visual data. Computer vision aims to help machines see, interpret, and understand their environment (input data) the way humans do. While the goal is to enable algorithms to process all types of visual data, recent work has focused on facial recognition and object identification at varying speeds. These find use in applications like security and autonomous vehicles.

It is made possible by image annotation service experts who acquire the necessary data in the form of images, videos, 3D scans of objects, etc., and then annotate and label them using boundaries to distinguish the target object in the data.

Accuracy is said to have been established when the algorithm can successfully interpret a test image and identify the required target subject/object in it. In some cases, all components of an image may be required to be identified, as with self-driving algorithms, for example.

Types of Data Annotation

AI is used for a wide range of applications, which calls for a flexible approach to its development. Data annotation and labelling follow the same principle, since the process is always carried out with the aim of recognizing a predefined target in a data set. This need for variety has given rise to numerous annotation techniques that vary by data type and purpose.

Here are some of the common ones in practice:

Image/Video Annotation

- Classification

The simplest form of annotation, classification consists of tagging an image with a label that describes the image as a whole. It also helps the AI detect similar images in a data set. The model learns to generalise as well: it can identify a given target subject in a new image based on its familiarity with that subject from training images. Classes are assigned at a coarse level, as there is no need to probe the subject further and extract more information about it.

- Object detection and recognition

Object detection is a step up from classification: besides the class of an annotated subject, additional information about it is recorded. Common examples are its location, quantity, colour, and size. Since collecting this additional information requires the AI to distinguish the subject from the rest of the image more precisely, boundaries are drawn around it, typically bounding boxes or polygons of various shapes. The advantage of this type of annotation is the ability to detect multiple classes in a single image.
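To make this concrete, here is a minimal sketch of a rectangular boundary annotation in Python. The `BoxAnnotation` class and its field names are hypothetical, chosen for illustration only. The sketch also computes intersection over union (IoU), a widely used measure of how closely a predicted box overlaps an annotated one, which is brought in here as an example and is not mentioned elsewhere in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    """A rectangular boundary around one object (hypothetical structure)."""
    label: str
    x: float       # left edge, in pixels
    y: float       # top edge, in pixels
    width: float
    height: float


def iou(a, b):
    """Intersection over union of two boxes: 0.0 = no overlap, 1.0 = identical."""
    left = max(a.x, b.x)
    top = max(a.y, b.y)
    right = min(a.x + a.width, b.x + b.width)
    bottom = min(a.y + a.height, b.y + b.height)
    intersection = max(0.0, right - left) * max(0.0, bottom - top)
    union = a.width * a.height + b.width * b.height - intersection
    return intersection / union if union > 0 else 0.0


# A human-drawn box and a model's predicted box for the same car.
annotated = BoxAnnotation("car", 10, 10, 100, 50)
predicted = BoxAnnotation("car", 20, 10, 100, 50)

overlap = iou(annotated, predicted)
print(f"IoU between annotation and prediction: {overlap:.2f}")
```

Storing location and size as explicit fields is what lets the extra information mentioned above (where the object is, how large it is, how many there are) be read straight from the annotations.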

- Segmentation

As the name suggests, this type of annotation is used to create multiple segments from an image/frame. Image and video annotation service experts define the required subjects in an image/frame at the pixel level by assigning each pixel to a class or object. This makes segmentation the most precise type of annotation, but also the most complex. Segmentation has three sub-categories:

- Semantic segmentation

Semantic segmentation gives context to what the algorithm is viewing in an image or frame by assigning every pixel to a class, which marks the boundaries between different kinds of objects. It gives precise information about each class, such as its presence, shape, and size, but does not separate individual objects of the same class.

- Instance segmentation

Instance segmentation recognizes each individual object and its location in the image, so that objects of the same class are kept apart, along with the shape and size information captured in semantic segmentation. It is most helpful whenever there’s a need to filter out unwanted information from an image or isolate the target subject from the background.

- Panoptic segmentation

A hybrid of the previous two types, panoptic segmentation lets the algorithm identify both the individual target subjects/objects in an image or frame and the background regions around them.
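As a rough illustration of how the three kinds of segmentation label differ, the sketch below uses a tiny 3 x 6 "image" stored as nested lists. The class ids and instance ids are made up for the example; real masks are far larger and usually stored as image files or arrays.

```python
# Semantic mask: one class id per pixel (assumed ids: 0 = road, 1 = car).
semantic_mask = [
    [0, 1, 1, 0, 1, 1],
    [0, 1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0, 0],
]

# Instance mask: one object id per pixel (0 = no object, 1 and 2 = two cars).
instance_mask = [
    [0, 1, 1, 0, 2, 2],
    [0, 1, 1, 0, 2, 2],
    [0, 0, 0, 0, 0, 0],
]


def panoptic(semantic, instance):
    """Pair every pixel's class id with its instance id."""
    return [
        [(class_id, object_id) for class_id, object_id in zip(sem_row, inst_row)]
        for sem_row, inst_row in zip(semantic, instance)
    ]


panoptic_mask = panoptic(semantic_mask, instance_mask)

# The semantic mask only says how many pixels are "car"...
car_pixels = sum(row.count(1) for row in semantic_mask)
# ...while the instance mask says how many separate cars there are.
car_instances = {object_id for row in instance_mask for object_id in row if object_id != 0}

print(car_pixels, len(car_instances), panoptic_mask[0][4])
```

The semantic mask alone cannot tell the two cars apart, the instance mask alone says nothing about the road, and the combined panoptic labels carry both pieces of information for every pixel.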

- Boundary identification

Boundary identification is a special case, as it is commonly used within the other annotation types to demarcate the intended targets from the rest of the image or video frame. However, it may also be used on its own when the goal is to identify an object’s boundary itself rather than to identify the object by means of that boundary.

This technique is used to train the AI to recognize lines and curves. It is also employed in deep learning to train models to delineate the target subjects/objects for automated annotation.

Text Annotation

Text annotation is used to train AI/ML models to recognize targets in textual data. It may at times be combined with voice/audio annotation, as is the case with natural language processing (NLP). As with image/video annotation, project requirements differ, so several text annotation techniques are in use.

- Entity annotation

Entity annotation is concerned with locating, extracting, and tagging target entities in text. Chatbots that use NLP models need this type of annotation to help them identify parts of speech, particularly named entities and keywords/phrases. Text annotation service experts go through the data set thoroughly, locate the required entities, highlight them, and label them by choosing from a predefined set of labels. To enhance the outcome, entity annotation is often combined with entity linking.

There are three types of entity annotation, namely:

- Named Entity Recognition (NER): Annotating entities that have proper names, such as people, organisations, and places.

- Keyphrase Tagging: Pinpointing and labelling keywords or keyphrases in textual data sets.

- Part-of-Speech (POS) Tagging: Identifying and annotating the grammatical role of each word, such as adjective, noun, or adverb.
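An entity annotation is often stored as a span of character positions plus a label chosen from the predefined set. The sketch below shows one possible shape for such a record; the `EntitySpan` class, the label names, and the sample sentence are all assumptions made for illustration.

```python
from dataclasses import dataclass

ALLOWED_LABELS = {"PERSON", "LOCATION", "DATE"}  # assumed predefined label set


@dataclass(frozen=True)
class EntitySpan:
    start: int   # index of the first character of the entity
    end: int     # index one past the last character
    label: str


def extract_entities(text, spans):
    """Check each span against the text and label set, then return (entity, label) pairs."""
    results = []
    for span in spans:
        if span.label not in ALLOWED_LABELS:
            raise ValueError(f"unknown label: {span.label}")
        if not 0 <= span.start < span.end <= len(text):
            raise ValueError(f"span out of range: {span}")
        results.append((text[span.start:span.end], span.label))
    return results


text = "Alice moved to Paris in 2021."
spans = [
    EntitySpan(0, 5, "PERSON"),
    EntitySpan(15, 20, "LOCATION"),
    EntitySpan(24, 28, "DATE"),
]

entities = extract_entities(text, spans)
print(entities)
```

Validating each label against the predefined set is a cheap way to catch typos and off-list labels before they reach the training data.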

- Entity linking

This process connects the entities identified and annotated in entity annotation to large repositories of related data. Search engine algorithms need it to improve their search capabilities and provide more accurate results. Each labelled entity in the text is linked to a URL that provides more information about it. Entity linking comes in two forms:

- Entity Disambiguation: Linking named entities to databases containing knowledge about them.

- End-to-end: A joint process in which entities are identified and annotated within a textual data set (also called entity recognition) and then disambiguated.

- Text classification

Also known as text categorization or document classification, this is where annotators read a body of text, or a few lines of it, analyse it, distinguish qualities like subject, intent, and sentiment, and classify it using a list of predetermined categories. It is similar to the classification used for images and video. It is used when annotating keywords or keyphrases is insufficient and an entire body of text needs to be annotated with a single label.

It consists of the following subcategories:

- Document Classification: Used whenever there’s a need to classify documents to sort and recall textual content.

- Product Categorisation: Most applicable to eCommerce platforms, it helps sort products/services based on intuitive classes and into categories for enhancing users’ search and overall shopping experience. It may be applied to product descriptions, images, or both. The appropriate category is chosen by annotators from a list of predetermined categories.

- Sentiment Annotation: Considers the sentiment, emotion, or opinion present in the text data for classification and labels the chosen segment accordingly. It is useful for detecting the emotion or sentiment present in an email or text message, especially when large data sets are involved, like an eCommerce site’s customer reviews.
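In contrast to entity annotation, text classification attaches a single label to a whole piece of text. A minimal sketch of sentiment-labelled reviews might look like this; the three-way label list, the `label_review` helper, and the review texts are hypothetical.

```python
from collections import Counter

SENTIMENT_LABELS = ("positive", "neutral", "negative")  # assumed category list


def label_review(text, label):
    """Attach one label from the predetermined list to an entire review."""
    if label not in SENTIMENT_LABELS:
        raise ValueError(f"label must be one of {SENTIMENT_LABELS}")
    return {"text": text, "label": label}


annotated_reviews = [
    label_review("Arrived early and works perfectly.", "positive"),
    label_review("Stopped charging after two days.", "negative"),
    label_review("It is a phone case.", "neutral"),
    label_review("Great value, would buy again.", "positive"),
]

# A quick look at how the labels are distributed across the data set.
label_counts = Counter(review["label"] for review in annotated_reviews)
print(label_counts)
```

Checking the label distribution early is useful because a data set dominated by one category can leave the model with too few examples of the others.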

- Linguistic Annotation

Simply put, linguistic annotation is the process by which language in text or audio data sets is tagged. It is also called corpus annotation. Here, annotation professionals identify and flag the grammatical, phonetic, or semantic components of the text or audio. It finds use in NLP solutions like chatbots, search engines (including voice-based ones), virtual assistants, machine translation, etc. There are four types:

- Discourse Annotation: The process of linking anaphors and cataphors to their respective antecedents and postcedents.

- Semantic Annotation: Annotating the meanings of words.

- Phonetic Annotation: Pertains to the labelling of natural pauses, intonation, and stress elements in speech.

- Part-of-Speech (POS) Tagging: Annotating the grammatical category of each word in a text.

Audio Annotation

The rise of smart homes, virtual assistants, chatbots, etc., has led to demand for AI that can handle speech and voice the way humans do. This, in turn, has led to the rise of audio annotation, especially for the NLP technology that underlies audio-based AI. Different audio annotation techniques are applied depending on a project’s goal.

- Sound Labelling

The data annotation technique of sound labelling consists of experts separating needed sounds from a given audio data set and labelling them. It’s the technique used to identify and extract keywords and phrases in audio data samples.

- Event Tracking

This technique is for situations that closely resemble real-world conditions where multi-source audio data is present. It helps evaluate the system’s performance in such conditions, particularly when there are overlapping sounds present.

- Speech-to-Text Transcription

This technique is at the core of NLP technology and involves transcribing recorded speech into text. Simultaneously, the important parts of the speech, like words, sounds, and punctuation, are noted carefully and relevant terms are annotated.

- Audio Classification

Audio classification is the audio counterpart of image and text classification: audio data is listened to and analysed so that an algorithm can learn to discern sounds and voice commands. It is at the heart of virtual assistant, automatic speech recognition, and text-to-speech program development. It comes in the following varieties:

- Acoustic Data Classification

It is used to identify the type of environment in which a sound was recorded. Annotators are responsible for recognizing different kinds of environments such as hallways, stone corridors, rooms, outdoors, etc. It finds use in monitoring systems and sound library maintenance.

- Music Classification

This is the audio classification process in which different types of music are sorted into categories such as genre, instruments used, ensemble, etc. It helps organise music libraries and improve recommendations.

- Natural Spoken Language Classification

It allows chatbots, virtual assistants, and similar technologies to understand human speech better by classifying its minute details like dialect, semantics, inflexions, etc.

- Environmental Sound Classification

It is used to match certain types of sounds to environments they are most commonly found in, such as the sounds of children running to a schoolyard. It helps develop better security systems based on sound detection and improves predictive maintenance.

Data Annotation and Labelling: Best Practices

A complex process like data annotation and labelling demands careful implementation. Otherwise, the results won’t match expectations. Here are some best practices that help prevent that:

- Collect a diverse set of data. For example, images should show the same object under different lighting conditions and from different angles. This helps counter bias and prevents the algorithm from getting confused.

- The data collected should also be specific: it should show the target subject itself and not something that merely resembles it. This helps improve accuracy.

- Establish a meticulous QA process to ensure that annotation/labelling quality stays high. It may combine various methods, such as task audits, targeted QA, and random QA.

- Follow a well-thought-out annotation guideline that clearly defines annotation and tool usage instructions. Use examples for labels when necessary. This prevents mistakes from happening.

- Create an efficient and seamless pipeline that works for your project, reducing costs and delivery times.

- Maintain clear and constant communication flow between all stakeholders via various activities and channels.

- Run a pilot to see how well your annotation setup works. Check the output and consider any feedback you get about it to improve the process until you get the best results.
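Two of the QA ideas above, random spot checks and measuring annotation quality, can be sketched briefly. The example assumes that a second annotator relabels a sample of items, which is one possible setup rather than a prescribed one. It uses Cohen's kappa, a standard statistic for agreement between two annotators that corrects for agreement expected by chance. All labels below are made up.

```python
import random
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators for the same six images.
annotator_a = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_b = ["cat", "cat", "dog", "cat", "cat", "dog"]

kappa = cohen_kappa(annotator_a, annotator_b)

# Random QA: pick a few item indices for a reviewer to check by hand.
rng = random.Random(0)  # fixed seed so the same sample can be reproduced
qa_sample = rng.sample(range(len(annotator_a)), k=3)

print(f"kappa={kappa:.2f}, items to review: {sorted(qa_sample)}")
```

A low kappa during the pilot usually points to unclear guidelines rather than careless annotators, so it is a useful signal for revising the instructions before scaling up.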

In Conclusion

The potential of AI/ML is vast, and accurate annotation/labelling keeps expanding it. The right processes, data, and business decisions will ensure that you get the best returns from the technology in terms of operational efficiency and overall profitability. Bringing in a professional data annotation service partner can amplify these benefits, thanks to their expertise and cost-effectiveness.
